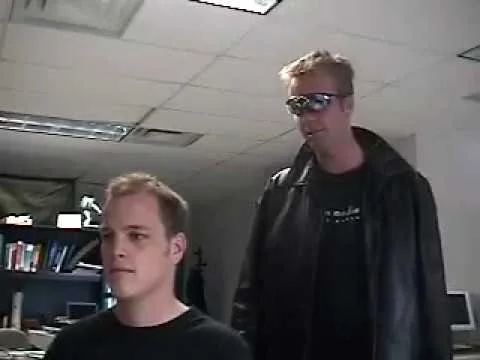

At the intersection of social interaction and wearable computing, ECS Glasses explored how devices can detect when someone is looking at you, rather than just when you are looking at a device. The prototype—described in “Eye Contact Sensing Glasses for Attention‐Sensitive Wearable Video Blogging”—used a pair of eyeglasses augmented with a custom eye-contact sensor and wireless streaming functionality.

When the system detected another person’s gaze directed at the wearer, it could trigger contextual behaviours: for example, switching camera modes, flagging attention events, or logging social-attention metrics for use in wearable video and lifelogging applications.
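The triggering behaviour described above can be sketched in a few lines. This is an illustrative sketch only, not the published implementation: it assumes the sensor yields timestamped yes/no eye-contact readings, and the names `AttentionEvent`, `detect_attention_events`, and `min_duration` are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass
class AttentionEvent:
    start: float  # time (s) of the first sample in the eye-contact run
    end: float    # time (s) of the last sample in the eye-contact run


def detect_attention_events(
    samples: Iterable[Tuple[float, bool]],
    min_duration: float = 0.5,  # assumed threshold, not from the paper
) -> List[AttentionEvent]:
    """Group consecutive eye-contact samples into attention events.

    `samples` holds (timestamp_seconds, eye_contact) pairs, a hypothetical
    stand-in for the sensor output. Runs shorter than `min_duration`
    are dropped as brief glances or noise.
    """
    events: List[AttentionEvent] = []
    run_start: Optional[float] = None
    last_contact = 0.0
    for t, contact in samples:
        if contact:
            if run_start is None:
                run_start = t  # a new run of eye contact begins
            last_contact = t
        elif run_start is not None:
            # The run just ended; keep it only if it lasted long enough.
            if last_contact - run_start >= min_duration:
                events.append(AttentionEvent(run_start, last_contact))
            run_start = None
    # Close a run that is still open when the samples end.
    if run_start is not None and last_contact - run_start >= min_duration:
        events.append(AttentionEvent(run_start, last_contact))
    return events
```

Each returned event could then be used to flag a moment in the video stream or be appended to a social-attention log.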

Highlights

Demonstrated a novel wearable system that senses when others are looking at you—a reversal of the typical “you are looking at the screen” model.

Integrated gaze detection and wearable electronics into an eyewear form factor, anticipating social and device-aware interactions in future wearables.

The published papers provide the technical basis and design rationale for attention-sensitive wearables.

This work underscores my ability to design and build systems that sense social context, bridge hardware and interaction design, and anticipate emerging attention-aware paradigms in wearable technology.

References

Eye Contact Sensing Glasses for Attention-Sensitive Wearable Video Blogging

ACM CHI. Vienna, Austria. 2004.

Connor Dickie, Roel Vertegaal, Jeffrey S. Shell, Changuk Sohn, Daniel Cheng and Omar Aoudeh.

Available: ACM - eyeBlog

Media: Boingboing.net, Slashdot.org

Augmenting and Sharing Memory with EyeBlog

1st ACM CARPE, ACM Multimedia. New York, New York. 2004.

Connor Dickie, Roel Vertegaal, David Fono, Changuk Sohn, Daniel Chen, Daniel Cheng, Jeffrey S. Shell and Omar Aoudeh.

Available: ACM - eyeBlog 2.0

Media: Globe & Mail (.pdf)

![ecsglasses[1].jpg](https://images.squarespace-cdn.com/content/v1/59dbc20ce3df281768a7c53e/1507679020128-KKBL180Q4Z8NWG0DREXN/ecsglasses%5B1%5D.jpg)

![ecsglasses[1].jpg](https://images.squarespace-cdn.com/content/v1/59dbc20ce3df281768a7c53e/1507690687032-B1UAZ9LVAX4YYFCXHJUZ/ecsglasses%5B1%5D.jpg)